Introducing SpideyTools!

Today I am releasing the beginnings of a suite of PowerShell tools to assist in web application penetration testing, geared specifically towards conducting testing according to the OWASP Testing Guide. The suite’s name: SpideyTools.

SpideyTools does things a little differently than most web application tools. It’s not a proxy (at least not yet) and it’s not a headless browser either. The inspiration for and uniqueness of this tool come from where it gathers its data: the browser’s developer tools, in my case Edge DevTools (yes, I use Edge, not Chrome). While the developer tools have several extremely useful and powerful functions, SpideyTools mainly relies on a single feature, namely “Export HAR.” The Export HAR feature saves all the web traffic (requests and responses) captured in your current browser tab to a JSON file. SpideyTools ingests and parses this HAR file, which provides the data that the various tools use to analyze the web application.
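To make the HAR idea concrete, here is a minimal sketch of reading one in PowerShell. It is not the SpideyTools implementation; it only assumes the standard HAR layout (log.entries, each with a request and a response), and the sample JSON and the example.test URLs are made up so the snippet runs without a real export.

```powershell
# Minimal HAR document, inlined so the sketch runs without a real export.
# The URLs and values are hypothetical.
$harJson = @'
{
  "log": {
    "entries": [
      {
        "request":  { "method": "GET",  "url": "https://example.test/search?q=shoes" },
        "response": { "status": 200 }
      },
      {
        "request":  { "method": "POST", "url": "https://example.test/login" },
        "response": { "status": 302 }
      }
    ]
  }
}
'@

# With a real export this would be:
#   $har = Get-Content -Raw ./testsite.har | ConvertFrom-Json
$har = $harJson | ConvertFrom-Json

# Each HAR entry pairs one request with its response.
$summary = foreach ($entry in $har.log.entries) {
    [pscustomobject]@{
        Method = $entry.request.method
        Url    = $entry.request.url
        Status = $entry.response.status
    }
}

$summary | Format-Table -AutoSize
```

Because a HAR file is plain JSON, ConvertFrom-Json is enough to turn the whole capture into objects you can filter and group like any other PowerShell data.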

Since I am specifically trying to create a tool to help with the OWASP Testing Guide, the de facto standard for web application testing, I set my sights on a task I find extremely tedious yet very important: Identify Application Entry Points (OWASP ID WSTG-INFO-06). This task involves identifying the complete attack surface of the application by determining every single web page as well as the different HTTP methods, parameters, cookies, and headers each web page uses. It sounds like a lot of work, maybe even too much work. I know my first interpretation of this task was “Identify a lot of Application Entry Points,” but really this task is asking for what Ron Swanson asks for: “all the bacon and eggs.”

On a recent test, I was shown just how important it is to map every single application entry point. I had found an easy SQL injection on a very specific page and, on a whim, decided to throw a basic SQL payload, or'1'='1, into a search term used by a completely different page. To my surprise, the search returned all the results, and when I entered or'1'='2, no results were found. I had found a blind SQL injection. I instantly remembered that this is exactly why OWASP directs you to map every single application entry point: you never know which parameter, header, or cookie may be vulnerable. I went through the rest of the application using the same payload against every search parameter the web app had and found another page vulnerable to blind SQL injection. I have learned my lesson: I map every application entry point.

In the past I have accomplished this task with an Excel spreadsheet in one window and a proxy in the other, but it’s slow going and, in an application with a lot of features, very tedious. This first release of SpideyTools includes several tools to help with this task. These tools are:

- Get-Links = extracts URLs from the various pages of the website
- Save-AttackSurface = creates a CSV file containing information on all the pages of the web application, including response code, parameters, and cookies set (yes, I still need to find a way to extract unique headers)
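On the open question of unique headers, one possible approach is sketched below. It is not part of SpideyTools. It assumes the standard HAR shape, where request.headers is a list of name/value pairs, and treats a header as "unusual" when it is not sent on every request. The sample entries, header values, and variable names are all hypothetical.

```powershell
# Hypothetical entries in HAR shape; only request.headers matters here.
$entries = @'
[
  { "request": { "url": "https://example.test/",
                 "headers": [ { "name": "Host",       "value": "example.test" },
                              { "name": "User-Agent", "value": "Edge" } ] } },
  { "request": { "url": "https://example.test/api/orders",
                 "headers": [ { "name": "Host",             "value": "example.test" },
                              { "name": "User-Agent",       "value": "Edge" },
                              { "name": "X-Requested-With", "value": "XMLHttpRequest" },
                              { "name": "Authorization",    "value": "Bearer REDACTED" } ] } }
]
'@ | ConvertFrom-Json

# Count how many requests carry each header name (case-insensitive).
$headerUsage = @{}
foreach ($entry in $entries) {
    # De-duplicate within a request so a repeated header is counted once.
    $names = @($entry.request.headers |
        ForEach-Object { $_.name.ToLowerInvariant() } |
        Sort-Object -Unique)
    foreach ($name in $names) {
        # A missing key yields $null, and 1 + $null evaluates to 1.
        $headerUsage[$name] = 1 + $headerUsage[$name]
    }
}

# Headers that appear on only some requests are the ones worth a closer look.
$total = @($entries).Count
$unusual = @($headerUsage.GetEnumerator() |
    Where-Object { $_.Value -lt $total } |
    ForEach-Object { $_.Key } |
    Sort-Object)

$unusual
```

Headers the browser sends on every request (Host, User-Agent) drop out, which leaves the application-specific ones such as custom X- headers or Authorization.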

So how exactly do you use the tools?

- Set Up Dev Tools

First, open a blank tab in your browser of choice. Right-click anywhere on the page and select “Inspect Element.” In the dev tools, navigate to the Network tab and make sure “Preserve Log” is enabled.

- Navigate the Website

Now, in this blank tab, navigate to the web page you are testing and click through the entire application, filling in any parameters for requests as you go. It is important to fill in every possible box on every form, since some requests only send a parameter if it has been filled out. Once you have exhausted all the visible pages, go ahead and click “Export HAR”.

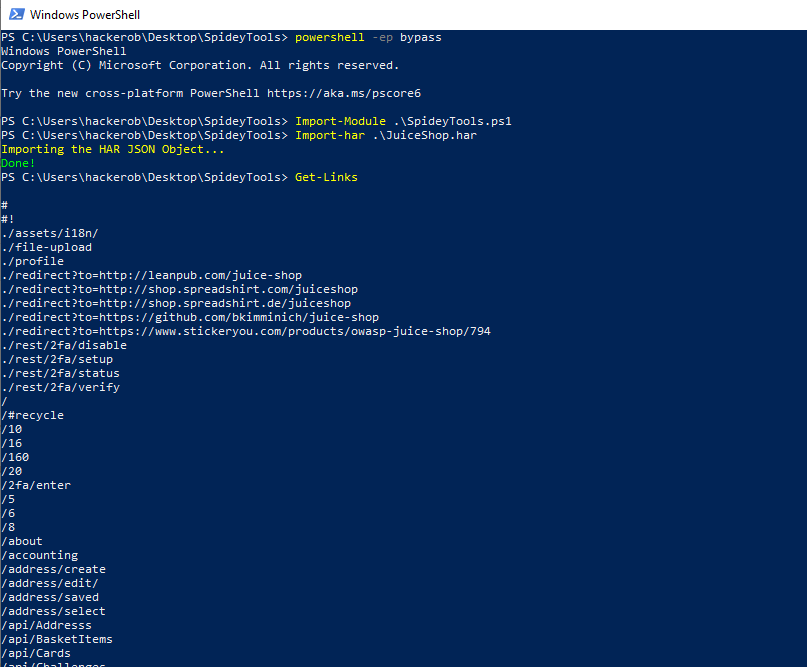

- Use SpideyTools to Find Hidden Pages

Open PowerShell and bypass those security warnings: powershell -ep bypass. Import the SpideyTools module: Import-Module ./SpideyTools.ps1. Import the HAR file: Import-Har -file ./testsite.har. Run Get-Links, which should print a list of URLs extracted from links on the different pages as well as any URLs extracted from the JavaScript code used by the web application. Now, in that same tab you were using, navigate to any URLs that you did not visit previously. Export the HAR again, import it again, and rinse and repeat until you have exhausted all the URLs that the web application exposes.
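For a feel of what link extraction from response bodies can look like, here is a rough sketch. This is not how Get-Links is implemented; it is a deliberately simple regex pass that assumes URLs show up as quoted strings starting with / or http(s)://. The sample bodies stand in for the response.content.text values in a HAR file, and every URL in them is made up.

```powershell
# Hypothetical response bodies: one HTML fragment and one JavaScript fragment.
$bodies = @(
    '<a href="/account/settings">Settings</a> <a href="https://example.test/help">Help</a>',
    'fetch("/api/v1/orders"); var legacy = "https://example.test/old/report.aspx";'
)

# Quoted strings that begin with "/" or "http(s)://".
# Inside a single-quoted PowerShell string, '' is a literal single quote.
# This is a heuristic: expect both false positives and misses on real sites.
$pattern = '["''](?<url>(?:https?://|/)[^"''\s<>]+)["'']'

$links = foreach ($body in $bodies) {
    foreach ($match in [regex]::Matches($body, $pattern)) {
        $match.Groups['url'].Value
    }
}

# De-duplicate so each candidate URL is listed once.
$links = @($links | Sort-Object -Unique)
$links
```

A regex like this cannot see URLs that JavaScript assembles at runtime from several pieces, which is one more reason to keep clicking through the application and re-exporting the HAR.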

- Use SpideyTools to Create CSV Report

You are now ready to run Save-AttackSurface, which will save all this traffic to a nice CSV file that you can open in Excel. Although it may still be somewhat tedious, I at least find it much more enjoyable, and maybe you will too.
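If you are curious how HAR entries can be flattened into spreadsheet rows, the sketch below shows the general idea. It is not the Save-AttackSurface implementation, and the column names are my own choice. It assumes the standard HAR fields request.queryString and response.cookies, and uses made-up sample entries.

```powershell
# Hypothetical entries in HAR shape.
$entries = @'
[
  {
    "request":  { "method": "GET", "url": "https://example.test/search?q=shoes",
                  "queryString": [ { "name": "q", "value": "shoes" } ] },
    "response": { "status": 200, "cookies": [ { "name": "session", "value": "abc" } ] }
  },
  {
    "request":  { "method": "GET", "url": "https://example.test/about", "queryString": [] },
    "response": { "status": 404, "cookies": [] }
  }
]
'@ | ConvertFrom-Json

# One row per request; multi-valued fields are joined with ';' so they fit in one cell.
$rows = foreach ($entry in $entries) {
    [pscustomobject]@{
        Method     = $entry.request.method
        Url        = $entry.request.url
        Status     = $entry.response.status
        Parameters = (@($entry.request.queryString) | ForEach-Object { $_.name }) -join ';'
        CookiesSet = (@($entry.response.cookies) | ForEach-Object { $_.name }) -join ';'
    }
}

# ConvertTo-Csv returns the CSV lines as strings, which is handy for a quick look.
# To write a file instead: $rows | Export-Csv -Path ./attack-surface.csv -NoTypeInformation
$csv = $rows | ConvertTo-Csv -NoTypeInformation
$csv
```

Joining parameter and cookie names into a single cell keeps one row per request, which makes the sheet easy to sort and filter in Excel.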

Thanks for reading and stay tuned as I add more useful tools to SpideyTools.